Clustering and Dynamic Loadbalancing

Communication Between Components

Each Ceptor component uses the Peer2Peer communication method. This can be compared to RMI, in the sense that a peer sends a command to another peer, which executes it and optionally sends a reply. The data being sent is a mix of externalizable and serialized Java objects, in a format optimized primarily for performance and secondarily for size. Blocks of data that exceed 32K are compressed to reduce their size. In practice this mostly happens with log records being sent to the log server, since most blocks are well below 100 bytes.
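The size-based compression rule can be illustrated with a minimal sketch. It is not Ceptor's wire format, which is not described here: the use of zlib, the boolean flag, and the function names encode_block and decode_block are assumptions made purely for illustration.

```python
import zlib
from typing import Tuple

# 32K threshold taken from the text above; everything else is hypothetical.
COMPRESSION_THRESHOLD = 32 * 1024


def encode_block(payload: bytes) -> Tuple[bool, bytes]:
    """Return (compressed?, data); only blocks above the threshold are compressed."""
    if len(payload) > COMPRESSION_THRESHOLD:
        return True, zlib.compress(payload)
    return False, payload


def decode_block(compressed: bool, data: bytes) -> bytes:
    """Reverse encode_block using the flag that travels with the data."""
    return zlib.decompress(data) if compressed else data
```

Small blocks pass through untouched, so the common case (blocks well below 100 bytes) pays no compression cost.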

Sample Configuration

Following is a sample configuration for setting up 2 servers in a cluster – it shows which settings to change, and where to do it.

In the following, we assume that the DNS names of the two servers are ppserver1 and ppserver2.

Log Clients

A log client’s configuration is stored in log4j.properties or log4j.xml – see log4j documentation at http://jakarta.apache.org for details about these files.

A sample configuration of a log4j appender looks like this:

log4j.appender.ITPLog=dk.itp.peer2peer.log.RemoteLogAppender

log4j.appender.ITPLog.LocationInfo=false

log4j.appender.ITPLog.Servers=ppserver1:21236;ppserver2:21236

log4j.appender.ITPLog.PeerName=SomeAgent

This tells the log4j appender to try ppserver1 at port 21236 first and, if it cannot connect, to fall back to ppserver2 at port 21236.
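The ordered failover described above can be sketched as follows. This is an illustration only, not the appender's implementation: parse_servers, first_reachable and the can_connect callback are hypothetical names, and the callback stands in for a real connection attempt.

```python
from typing import Callable, List, Optional, Tuple

Address = Tuple[str, int]


def parse_servers(value: str) -> List[Address]:
    """Split 'host:port;host:port' into (host, port) pairs, keeping the order."""
    servers = []
    for entry in value.split(";"):
        entry = entry.strip()
        if not entry:
            continue
        host, _, port = entry.rpartition(":")
        servers.append((host, int(port)))
    return servers


def first_reachable(servers: List[Address],
                    can_connect: Callable[[Address], bool]) -> Optional[Address]:
    """Return the first server, in list order, that accepts a connection."""
    for server in servers:
        if can_connect(server):
            return server
    return None
```

With the sample value ppserver1:21236;ppserver2:21236, ppserver2 is only chosen when the attempt against ppserver1 fails.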

Statistics Server

The statistics server needs to know which config servers to connect to, and retrieve statistics from – in ceptor-configuration.xml the following property must exist for the statistics server:

statistics.sources = ppserver1:21112;ppserver2:21112

This tells the statistics server to collect statistics from the configuration servers at ppserver1 and ppserver2, both at port 21112.

Agents

An agent needs to know the addresses of the configuration servers. List them either in the system property portalprotect.config.servers or in the property config.servers in the file ptserver.properties:

config.servers=ppserver1:21233;ppserver2:21233

This tells the agent to use either ppserver1 at port 21233 or ppserver2 at port 21233.

Dispatchers

The dispatcher, like the agent, needs to know which config servers are available. You specify them in the web.xml deployment descriptor inside the dispatcher .war file, by setting the following servlet init parameter:

config.servers = ppserver1:21233;ppserver2:21233

Configuration Servers

The configuration servers need to know about each other. Set one server as master and the other as slave, like this:

Configuration for configserver1 at ppserver1:

cluster.server=master

Configuration for configserver2 at ppserver2:

cluster.server=slave

cluster.server.master=ppserver1:21233

You tell the first server that it is the master, and the second that it is the slave and that it can find the master server at ppserver1, port 21233.

Session Controllers

The session controllers need to be aware of each other, so you tell one of them to listen for connections from mirrored servers, and you tell the other one to connect to the first one.

Configuration for sessionctrl1 at ppserver1:

mirror.listen=true

mirror.listen.port=12234

Configuration for sessionctrl2 at ppserver2:

mirror.servers=ppserver1:12234

Here, you tell the first session controller to listen at port 12234 for connections from other mirrored session controllers, and the second session controller is told that it needs to connect to the first one.

Multiple Separate Clusters of Session Controllers

It is possible to have a setup, where there exist multiple separate clusters of session controllers – e.g. a cluster for internal users, and another for external users.

You can either mirror all sessions between all session controllers as described in the previous section, or you can separate the clusters completely so that a replication problem in one cluster cannot affect the other.

To separate the two, set the “clusterID” attribute for all session controllers in the “internal” cluster to 1, and set it to 2 for all session controllers in the “external” cluster. This will ensure that all sessions created by a session controller in the “internal” cluster will have a cluster ID of 1, and all sessions created within the “external” cluster will have a cluster ID of 2.

Then, if you have an application that is shared between both internal and external users, you can configure the agent to connect to both clusters of session controllers, so it can access sessions from both internal and external users simultaneously.

Begin by setting it up to connect only to the “internal” cluster as you normally would (by setting the ptsserver/sessioncontrollers property to point to all the session controllers in the “internal” cluster).

Then, add the 2 properties “clusters=2” and “cluster.2.sessioncontrollers=xxxx:port;yyyy:port” where xxxx:port;yyyy:port is the list of session controllers in cluster 2 (the “external” cluster).

This way, the agent will use a session controller in the “internal” cluster by default, but if it receives a session ID which has cluster ID 2 in it, it will use one of the session controllers in cluster 2 (the “external” cluster) to access or manipulate the session.

In this way, an agent can access more than one cluster simultaneously, while keeping each cluster totally unaware of the other.
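The routing rule can be sketched as a simple lookup. This is a hedged illustration, not the agent's code: how the cluster ID is encoded in a session ID is not described here, so the function takes the ID directly, and the key name sessioncontrollers, the host names and the port numbers below are hypothetical.

```python
from typing import Dict, List

# Hypothetical agent properties; host names and ports are placeholders.
EXAMPLE_PROPS = {
    "sessioncontrollers": "internal1:10001;internal2:10001",
    "clusters": "2",
    "cluster.2.sessioncontrollers": "external1:10001;external2:10001",
}


def controllers_for_cluster(props: Dict[str, str], cluster_id: int) -> List[str]:
    """Pick the session controller list for the cluster that owns a session.

    IDs with no 'cluster.<id>.sessioncontrollers' entry fall back to the
    default list, mirroring the "internal cluster by default" behaviour.
    """
    key = f"cluster.{cluster_id}.sessioncontrollers"
    value = props.get(key, props["sessioncontrollers"])
    return [entry for entry in value.split(";") if entry]
```

A session carrying cluster ID 2 is served by the second list, while every other session stays on the default cluster.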

Dynamic Loadbalancing

If you wish to share the load between multiple servers, you can configure the order that servers are specified in differently for different agents. E.g. let half the agents have config.servers=nio://server1:21233;nio://server2:21233 and the other half have config.servers=nio://server2:21233;nio://server1:21233 thus “manually” loadbalancing them.
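For illustration, the manual approach amounts to rotating the server list per agent. The helper below is a hypothetical sketch for generating such values, for example from a deployment script; it is not part of Ceptor.

```python
from typing import List


def rotated_server_list(servers: List[str], agent_index: int) -> str:
    """Build a config.servers value whose first entry depends on the agent index."""
    shift = agent_index % len(servers)
    return ";".join(servers[shift:] + servers[:shift])
```

With two servers, even-numbered agents get server1 first and odd-numbered agents get server2 first, which reproduces the two values shown above.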

This quickly becomes tedious in a large-scale setup. Instead, you can use dynamic loadbalancing: all agents use the same connection URLs, prefixed with “loadbalance:”, e.g. config.servers=loadbalance:nio://server1:21233;server2:21233.

Dynamic loadbalancing is supported by clients connecting to config servers, log servers, session controllers and useradmin servers. Note, however, that loadbalancing between multiple log servers, although it works perfectly, can be quite a hassle, since it is impossible to predict on which log server the logging output from a particular agent will end up.

Benefits and Drawbacks

It is important to recognize the benefits and the drawbacks of using dynamic loadbalancing.

It is significantly easier to configure dynamic loadbalancing than its manual equivalent, and adding a server to a cluster means you only have to enter it in one place instead of everywhere you have a different order of servers.

When using dynamic loadbalancing, each client has a connection to all available servers. This uses more socket handles, so operating system limits have to be increased accordingly, and it clutters the log with more information.

But if a server goes down or later is brought back up, then the load (measured in number of connected clients) will be shared equally between all currently available servers in a cluster.

How it Works

A client participating in dynamic loadbalancing is in reality connected to all available servers: it has a single active connection, and the rest are passive. A server component, e.g. the config server, is not logically aware that passive connections exist at all. They are handled by the communication framework used by each server.

Upon startup, a client creates a passive connection to all available servers, and then queries each server in turn for how many active connections it currently has. It then selects the server with the lowest number of active connections, and asks it to activate the previously passive connection. If more than one server has the same (lowest) number of active connections, a random one is picked and used.
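The selection rule can be sketched in a few lines. This is an illustration of the rule as described, not the communication framework's code; pick_active_server and its parameters are hypothetical names.

```python
import random
from typing import Dict, Optional


def pick_active_server(active_counts: Dict[str, int],
                       rng: Optional[random.Random] = None) -> str:
    """Return the server with the fewest active connections; ties are broken randomly."""
    rng = rng or random.Random()
    lowest = min(active_counts.values())
    candidates = sorted(name for name, count in active_counts.items()
                        if count == lowest)
    return rng.choice(candidates)
```

The optional rng argument only exists so that the random tie-break can be made reproducible when experimenting.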

This connection is now used actively, while the others are latent and have no traffic except the occasional ping to check the server is still present.

If there are any of the configured servers which the client is unable to connect to, it will (every 30 seconds) attempt to connect to these servers.

Every 10 minutes, the client re-queries all connected servers to check whether the load on them has changed. If one of the passive servers has at least 3 fewer active connections than the currently active server, the client switches active server by making the new one active and disconnecting from the old one. It reconnects to the old server 30 seconds later, now as a passive connection.
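The periodic switch decision can be sketched as below. It is a simplified illustration rather than the actual implementation: server_to_switch_to is a hypothetical name, and ties between equally loaded candidates are broken by name here only to keep the example deterministic.

```python
from typing import Dict, Optional

# Threshold from the text above ("at least 3 fewer active connections").
MIN_DIFFERENCE = 3


def server_to_switch_to(active: str, active_counts: Dict[str, int],
                        min_difference: int = MIN_DIFFERENCE) -> Optional[str]:
    """Return a better server if one has at least min_difference fewer
    active connections than the current one, otherwise None."""
    best = min(active_counts, key=lambda name: (active_counts[name], name))
    if best != active and active_counts[active] - active_counts[best] >= min_difference:
        return best
    return None
```

The threshold keeps clients from moving back and forth between servers whose load differs by only one or two connections.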

If the server which has the active connection becomes unavailable, the client will immediately re-query the remaining servers for their current active connection count, and select the one with the fewest connections, making it active.

When the server becomes available again, the clients will re-distribute their load across all available servers.

Example with and Without Dynamic Loadbalancing

You have a setup with 4 servers and 200 agents. The configuration looks like this:

config.servers=server1:21233;server2:21233;server3:21233;server4:21233

Each of the 200 agents will attempt to connect to the first server, and if it is available, the agent will not try to connect to any additional servers but simply use this one. Assuming all 4 servers are up at the time the agents connect, the number of socket connections for each server will look like this:

Server1: 200 sockets

Server2: 0 sockets

Server3: 0 sockets

Server4: 0 sockets

If server1 becomes unavailable for any reason, all agents will move to server2, and when server1 becomes available again, no connections will move back to it.

With dynamic loadbalancing, the configuration looks like this:

config.servers=loadbalance:server1:21233;server2:21233;server3:21233;server4:21233

Each of the 200 agents will connect to all 4 servers, but select the one with the fewest active connections for itself. The number of socket connections for each server will look approximately like this:

Server1: 200 sockets, 50 active, 150 passive

Server2: 200 sockets, 50 active, 150 passive

Server3: 200 sockets, 50 active, 150 passive

Server4: 200 sockets, 50 active, 150 passive

Multiply that by the different types of connections (config server, session controller, log server and useradmin server) and each server will have 800 sockets open in total, 25% of them active.

If server1 becomes unavailable, its clients will redistribute themselves between the remaining servers, like this:

Server1: down

Server2: 200 sockets, 67 active, 133 passive

Server3: 200 sockets, 67 active, 133 passive

Server4: 200 sockets, 66 active, 134 passive

When server1 is back up, the distribution will return to approximately 50 active connections for each server.
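The numbers in this example can be reproduced with a small simulation. It is a simplified sketch: clients choose one at a time, ties are broken by server name instead of randomly, and the 3-connection switch threshold is ignored, so a real system will only approximate these figures. The function name assign_clients is hypothetical.

```python
from typing import Dict


def assign_clients(counts: Dict[str, int], clients: int) -> Dict[str, int]:
    """Let clients pick, one at a time, the server with the fewest active connections."""
    result = dict(counts)
    for _ in range(clients):
        target = min(result, key=lambda name: (result[name], name))
        result[target] += 1
    return result


# 200 agents start against 4 servers with no active connections.
balanced = assign_clients({f"server{i}": 0 for i in range(1, 5)}, 200)

# server1 goes down: its active clients re-select among the remaining servers.
remaining = {name: n for name, n in balanced.items() if name != "server1"}
after_failure = assign_clients(remaining, balanced["server1"])

print(balanced)
print(after_failure)
```

The first result has 50 active connections per server, and the second spreads the 50 orphaned clients as 67, 67 and 66 across the three remaining servers.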

Configuration

For all agents, including the dispatcher and the session controller, the following properties can be changed. Refer to the detailed description at the end of this document.

lb.changeactive.differenceinconnections=3

lb.reconnectinterval=30

lb.switchactiveinterval=600

Note that changes made online only affect newly created connections, not existing ones.

Recommendations

We only recommend using dynamic loadbalancing if you have a system with significant load, or a significant number of connected agents. If you only have e.g. 10 agents and 2 servers in a cluster, it is usually not worth the hassle to use dynamic loadbalancing, but if you have a system with e.g. 4 servers in a cluster and several hundred agents, then it is a very good idea to use it.

Monitoring Loadbalancing

It is quite easy to monitor (and manipulate) the load distribution between servers using the Ceptor Console.

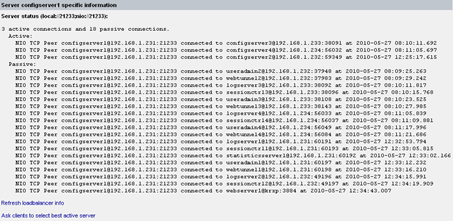

If you go to Server Status and view Details for a particular server, e.g. configserver1 then you can select "Loadbalanced connections info" and you will see something similar to this:

Here, you can either refresh the view, or ask all currently connected clients (passive or active) to reconsider their choice of active server by clicking on “Ask clients to select best active server”.

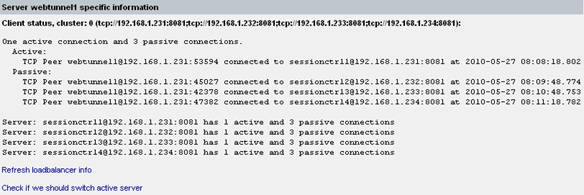

For an agent, you can also select “Show sessioncontroller loadbalancing info” which will give you information similar to this:

Here, you can see the agent's information about how many active and passive connections each session controller has to other agents.

You can also see the specific information about which servers this agent currently has a connection to.

© Ceptor ApS. All Rights Reserved.